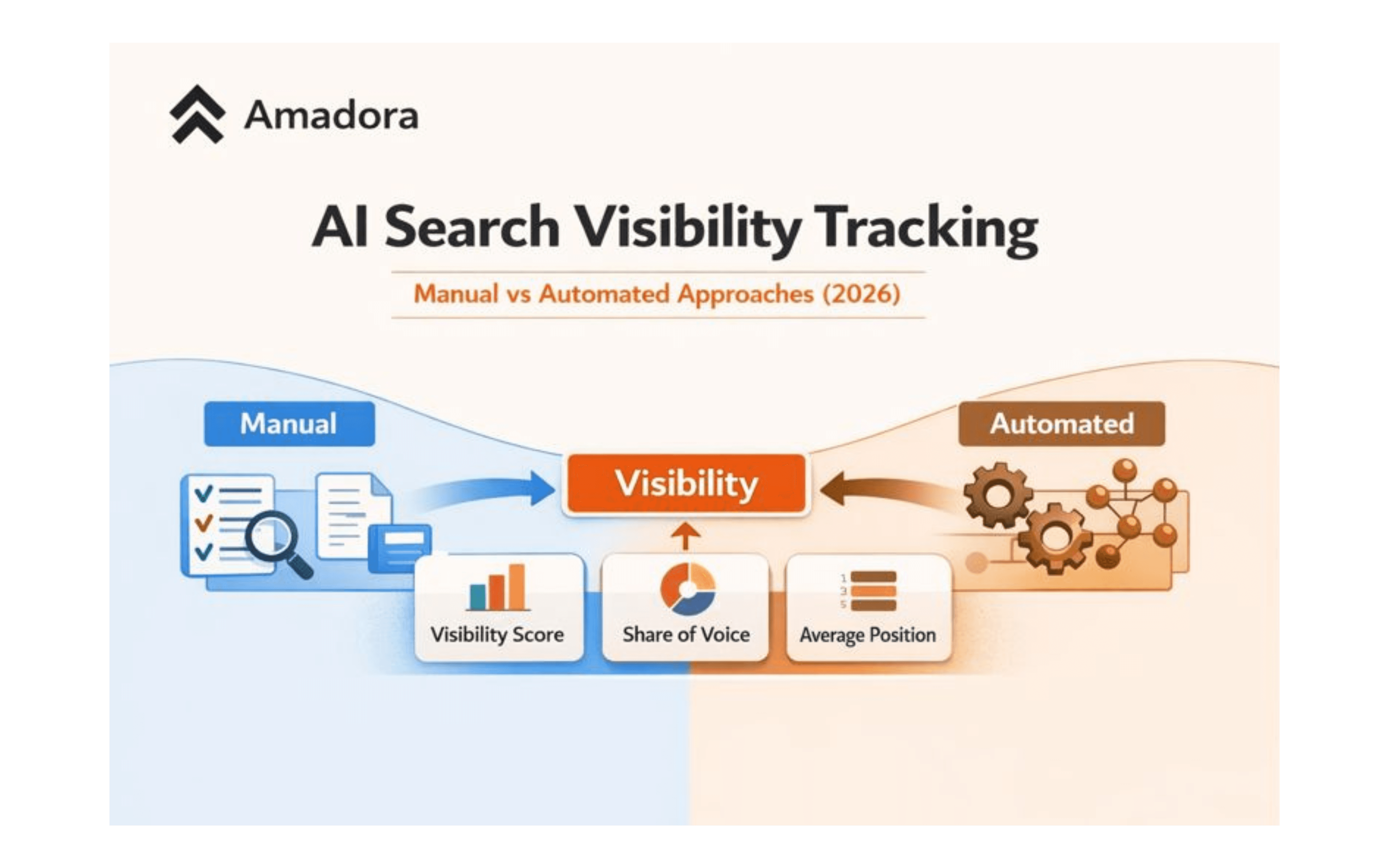

AI Search Visibility Tracking in LLMs (2026): Manual vs Amadora.ai

Learn how AI search visibility tracking works in LLMs. This 2026 guide compares manual tracking vs Amadora and explains the metrics that matter: visibility score, share of voice, and average position.

Massi Hashemi

Product Marketing Manager

Are you actually visible in AI-generated answers, or are competitors being recommended instead?

AI search visibility tracking is the process of measuring whether, where, and how often your brand appears inside AI-generated answers across LLM-powered experiences such as AI Overviews and conversational search tools.

This matters in 2026 because discovery is moving from “blue links” to direct answers. If your brand is not present in the answer, you can lose demand even when your traditional SEO performance looks strong.

TL;DR

AI search visibility is not the same as SEO rankings. It measures brand presence inside generated answers.

Manual tracking helps with learning but becomes unreliable at scale due to variance and time cost.

Amadora tracks AI visibility using Visibility score, Share of voice, and Average position.

The most effective teams connect visibility to prompts, competitors, and citations, then act on the gaps.

If you want consistent reporting over time, automation is required.

What is AI search visibility?

AI search visibility describes how your brand appears inside AI-generated answers: whether you are mentioned, how often, how prominently, and in what competitive context.

A practical definition:

AI search visibility = measurable brand presence in AI-generated answers for a defined prompt set, across platforms and time.

It helps you answer:

Do we show up at all for the questions customers ask?

Are we mentioned more or less than competitors?

Are we surfaced early in the answer, or buried?

Which sources does AI rely on when it talks about us?

How AI search visibility can be tracked manually

Manual tracking is often the fastest way to get started, especially if you want to understand how your category is represented in AI answers.

Manual tracking method (simple and repeatable)

1. Create a prompt set (20–50 prompts)
   - Discovery prompts (“best X for Y”)
   - Comparison prompts (“X vs Y”)
   - Evaluation prompts (“what should I look for in X?”)

2. Fix your variables
   - Platforms to test
   - Location and language (if relevant)
   - Cadence (weekly or bi-weekly)

3. Run the same prompts consistently
   - Do not rewrite prompts each time
   - Capture the full response text

4. Record structured fields
   - Mentioned? (Yes / No)
   - Position in the answer (top / middle / bottom)
   - Competitors mentioned
   - Citations present? (Yes / No)
   - Source domains (if visible)

5. Repeat for 2–4 weeks
   - Patterns matter more than any single run

Manual tracking is best for learning and intuition-building.
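If you keep your log in a spreadsheet, the structured fields above map naturally onto one row per prompt run. Here is a minimal Python sketch of that idea. Everything in it is an illustrative assumption rather than a prescribed format: the `RunRecord` fields, the helper names, the sample brand names, and the choice to bucket position by thirds of the answer text. Brand detection is a naive case-insensitive substring match, so treat it as a starting point for manual review, not a replacement for it.

```python
import csv
import io
from dataclasses import dataclass, field
from datetime import date


@dataclass
class RunRecord:
    """One row of the tracking sheet: a single prompt run on one platform."""
    run_date: date
    platform: str
    prompt: str
    response_text: str
    mentioned: bool
    position: str  # "top", "middle", "bottom", or "" when the brand is absent
    competitors: list[str] = field(default_factory=list)
    citations_present: bool = False
    source_domains: list[str] = field(default_factory=list)


def position_bucket(response_text: str, brand: str) -> str:
    """Bucket the brand's first mention by thirds of the answer (an assumption)."""
    if not brand:
        return ""
    idx = response_text.lower().find(brand.lower())
    if idx < 0:
        return ""
    fraction = idx / max(len(response_text), 1)
    if fraction < 1 / 3:
        return "top"
    if fraction < 2 / 3:
        return "middle"
    return "bottom"


def make_record(run_date, platform, prompt, response_text, brand,
                competitor_names, source_domains=()):
    """Fill the structured fields from a captured answer (naive substring match)."""
    text = response_text.lower()
    return RunRecord(
        run_date=run_date,
        platform=platform,
        prompt=prompt,
        response_text=response_text,
        mentioned=brand.lower() in text,
        position=position_bucket(response_text, brand),
        competitors=[c for c in competitor_names if c.lower() in text],
        citations_present=bool(source_domains),
        source_domains=list(source_domains),
    )


def to_csv(records) -> str:
    """Serialize records to CSV text that can be pasted into a spreadsheet."""
    buf = io.StringIO()
    writer = csv.writer(buf)
    writer.writerow(["date", "platform", "prompt", "mentioned", "position",
                     "competitors", "citations_present", "source_domains",
                     "response_text"])
    for r in records:
        writer.writerow([
            r.run_date.isoformat(), r.platform, r.prompt,
            "Yes" if r.mentioned else "No", r.position,
            "; ".join(r.competitors),
            "Yes" if r.citations_present else "No",
            "; ".join(r.source_domains), r.response_text,
        ])
    return buf.getvalue()
```

Keeping the full response text in the same row means you can re-check any Yes / No field later without rerunning the prompt.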

Limitations of manual AI search visibility tracking

Manual tracking breaks down once you try to treat it as a reliable metric.

Common limitations include:

Variance: small prompt changes can lead to very different answers, and even an identical prompt can return a different answer from one run to the next

Low repeatability: runs are hard to compare over time

Time cost: maintaining prompt sets becomes expensive

Bias: teams test prompts they expect to win, which hides real gaps

A simple rule:

Manual tracking is useful for discovery. Automated tracking is better for measurement.
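One way to see how much variance affects your own log is to run each prompt several times and look at how often the brand shows up. The sketch below assumes you already have a list of `(prompt, mentioned)` pairs from repeated runs. The function names and the 0.2 / 0.8 thresholds are arbitrary illustrations, not an established standard.

```python
from collections import defaultdict


def mention_rates(runs):
    """Map each prompt to the fraction of its runs in which the brand was mentioned.

    `runs` is an iterable of (prompt, mentioned) pairs, one pair per run.
    """
    totals = defaultdict(int)
    hits = defaultdict(int)
    for prompt, mentioned in runs:
        totals[prompt] += 1
        hits[prompt] += int(bool(mentioned))
    return {prompt: hits[prompt] / totals[prompt] for prompt in totals}


def unstable_prompts(rates, low=0.2, high=0.8):
    """Return prompts whose mention rate sits between the two thresholds.

    These are the prompts where a single run tells you very little.
    """
    return sorted(p for p, rate in rates.items() if low < rate < high)
```

A prompt that mentions you in roughly half of its runs is neither a win nor a loss. It is a prompt where one spot check is likely to mislead you.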

How automated AI search visibility tracking works (and why Amadora is built for it)

Automation addresses two core problems: consistency and scale.

Amadora structures AI search visibility tracking around projects, where you define:

Your brand and website

A default geo/location

Topics (to group visibility meaningfully)

A set of AI search prompts reflecting real user questions

Once configured, Amadora aggregates AI-generated answers across prompts and provides a baseline view of visibility before you drill into individual prompts.

Metrics that matter (and the ones Amadora tracks)

Visibility score

Visibility score measures the percentage of AI-generated answers in which your brand is mentioned. This answers the most basic question: are we appearing at all?

Share of voice

Share of voice shows how often your brand is mentioned relative to competitors in the same answers, helping you understand whether you are dominant or marginal.

Average position

Average position reflects where your brand appears within the AI-generated answer. Lower values indicate earlier, more prominent mentions.
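To make the three metrics concrete, here is a small Python sketch that computes them from a set of answers. It is not Amadora's implementation, and the exact formulas are assumptions: share of voice is taken as your brand's mentions divided by all tracked-brand mentions, and position is the 1-based order in which tracked brands are first mentioned in an answer. The `Answer` structure and the function names are hypothetical.

```python
from dataclasses import dataclass, field
from typing import Optional


@dataclass
class Answer:
    """One AI-generated answer, reduced to the tracked brands it mentions."""
    prompt: str
    # Tracked brands in order of first mention, each listed at most once.
    brands_in_order: list[str] = field(default_factory=list)


def visibility_score(answers, brand: str) -> float:
    """Percentage of answers in which the brand is mentioned."""
    if not answers:
        return 0.0
    hits = sum(1 for a in answers if brand in a.brands_in_order)
    return 100.0 * hits / len(answers)


def share_of_voice(answers, brand: str) -> float:
    """Brand mentions as a percentage of all tracked-brand mentions (assumed formula)."""
    total_mentions = sum(len(a.brands_in_order) for a in answers)
    if total_mentions == 0:
        return 0.0
    own_mentions = sum(1 for a in answers if brand in a.brands_in_order)
    return 100.0 * own_mentions / total_mentions


def average_position(answers, brand: str) -> Optional[float]:
    """Mean 1-based mention order over answers that mention the brand.

    Returns None when the brand never appears, since there is nothing to average.
    """
    positions = [a.brands_in_order.index(brand) + 1
                 for a in answers if brand in a.brands_in_order]
    if not positions:
        return None
    return sum(positions) / len(positions)
```

For example, if a brand appears in 2 of 4 answers, first in one and second in the other, this sketch gives a visibility score of 50.0 and an average position of 1.5. Averaging position only over answers that mention the brand keeps the two metrics separate: visibility tells you whether you appear, and position tells you where.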

Sources and citations

Amadora also surfaces sources and citations at the prompt level (domains, URLs, and search query context), helping explain why competitors appear and which sources influence AI answers.

The Usage percentage view highlights which domains contribute most to citations, making it easier to identify pages that shape AI responses.
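If you collect cited URLs by hand, you can approximate a similar domain breakdown yourself. The sketch below assumes "usage" means each domain's share of all collected citations, which may differ from how Amadora calculates it. The function name and the example URLs in the usage note are hypothetical.

```python
from collections import Counter
from urllib.parse import urlparse


def domain_usage(cited_urls):
    """Return {domain: percentage of all citations}, most-cited domain first."""
    domains = []
    for url in cited_urls:
        host = urlparse(url).netloc.lower()
        if host.startswith("www."):
            host = host[4:]  # treat www.example.com and example.com as one domain
        if host:
            domains.append(host)
    counts = Counter(domains)
    total = sum(counts.values())
    if total == 0:
        return {}
    return {domain: 100.0 * n / total for domain, n in counts.most_common()}
```

Given four citations where two point at `example.com` (one with and one without the `www.` prefix), that domain would come out at 50.0 and lead the list. Those are the pages worth reading first when you want to know why a competitor is being recommended.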

Manual tracking vs Amadora

While building Amadora, we noticed teams could run dozens of tests and still struggle to compare results week over week, because prompts and outputs vary so much.

| Criteria | Manual tracking | Amadora |
|---|---|---|
| Setup | Fast | Setup once per project |
| Scale | Limited | Systematic prompt tracking |
| Consistency | Hard | More repeatable over time |
| Competitive context | Manual effort | Share of voice built-in |
| Prominence signal | Hard to assess | Average position built-in |
| Explanation (“why them?”) | Guesswork | Sources, citations, usage data |

Common mistakes that make AI visibility tracking misleading

Changing prompts every time and calling it a trend

Tracking too few prompts to represent real demand

Ignoring geo or language effects

Measuring only “mentioned / not mentioned”

Not inspecting sources and citations

FAQ

Can AI search visibility be tracked accurately?

Yes, but accuracy depends on repeatability (same prompts, consistent cadence) and enough prompt coverage to represent real demand.

How is AI search visibility different from SEO rankings?

SEO rankings measure page order in results. AI search visibility measures brand presence inside generated answers, where clicks may never occur.

What’s the difference between manual tracking and Amadora?

Manual tracking relies on people running prompts and documenting results. Amadora turns that workflow into structured reporting using Visibility score, Share of voice, and Average position, plus citations and sources.

How often should AI search visibility be measured?

Most teams start with weekly or bi-weekly measurement to spot changes without overreacting to short-term noise.

Do AI systems use the same sources?

They often overlap, but behavior varies by platform and model, which is why reviewing sources and citations is critical.

What to do next

If you want a clear starting point:

Define your topics

Build a prompt set that reflects real discovery and comparison intent

Establish a baseline

Track Visibility score, Share of voice, and Average position

Use citation patterns to guide content and authority-building decisions

If you’re ready to move beyond spreadsheets, the next step is the Amadora setup guide:

How to track AI search visibility with Amadora.ai
