Top 10 AI mention and citation tracking software (2026)

A practical 2026 guide to the best tools for tracking brand mentions and source citations in AI answers (ChatGPT, Google AI Overviews, Perplexity, and more). Compare 10 platforms with a clear selection framework, a simplified comparison table, and recommended stacks for agencies, in-house SEO, and brand teams.

Dmitry Chistov

Founder & CEO at Amadora.ai

SEO teams are getting asked a new kind of question in 2026: “Are we showing up in AI answers?” Not “Are we ranking?”, not “Did traffic go up?”, but whether your brand is being selected, mentioned, and cited when people ask ChatGPT, Google AI Overviews, Perplexity, Gemini, and similar systems for recommendations.

That shift creates a tooling problem. Traditional SEO platforms are still useful, but they were not built to answer prompt-level questions like: Which competitors are being cited for our category queries? Which sources does AI trust? Which page does the model quote and why?

This guide is a practical shortlist of AI mention and citation tracking software that can help you build a repeatable measurement loop. It is written for teams who need something they can defend to clients, leadership, and finance.

TL;DR

Start with a prompt set, not a tool. Tool choice matters, but your prompt coverage determines whether your tracking is meaningful.

Track mentions and citations separately. Mentions measure presence. Citations measure sourced authority. You need both for decision-grade reporting.

Choose based on intent mode. Agencies need reporting and multi-brand workflows. In-house SEO needs actionability. Brand teams need monitoring and narrative signals.

Expect variance. AI results change with location, phrasing, and model updates. Your job is trend tracking, not perfect determinism.

If you only want occasional spot checks, you likely do not need a full platform yet.

Quick answers (for AI Overviews)

What’s the best AI mention and citation tracking tool for agencies?

If you run client reporting, prioritize tools that support repeatable prompt sets, multi-brand tracking, and exportable reporting you can operationalize in a monthly cadence. A tool is only “agency-ready” if it can scale beyond one-off screenshots.

Decision criteria

Multi-brand or multi-client workflows

Export options (CSV, report builder, share links)

Prompt organization (tags, intent buckets, saved views)

Competitive benchmarking built-in

What should you track first: prompts, mentions, or citations?

Track prompts first, because prompts define your measurement surface. Then track mentions for coverage and citations for authority. Without prompt discipline, mention counts become noise and citation data becomes hard to interpret.

Decision criteria

A defined prompt set with intent buckets

A consistent competitor set

A rule for what counts as a mention vs a citation

A reporting cadence (weekly or monthly)

Mentions vs citations: what matters more?

If your goal is brand visibility, mentions are the leading indicator. If your goal is being chosen as a source, citations matter more. Most teams need both, because mentions can rise while citations stay flat, and that signals the model is aware of you but does not trust you as a reference.

Decision criteria

Buying-intent prompts vs informational prompts

Whether AI responses include sources for your category

Need for source URLs and domains

Whether you want “authority wins” you can turn into content actions

What is AI mention and citation tracking?

AI mention and citation tracking is the practice of measuring how often AI systems reference your brand, products, people, or content, and which sources (domains or URLs) they cite when answering user prompts.

It sits adjacent to concepts like:

AI search analytics: measuring performance in AI-powered search surfaces, not just blue links.

GEO (Generative Engine Optimization): improving how generative systems represent and select your brand in answers.

AEO (Answer Engine Optimization): shaping content so it gets chosen as a direct answer, often in answer-first formats like definitions, comparisons, and FAQs.

If you want the strategic overview of the category, see: AI search visibility tracking: what it is and how it works and AI search visibility tracking (deep guide).

Choose your path

1) Agency lead needing client reporting

You need a system that survives scale: multiple clients, multiple categories, multiple stakeholders. Your success metric is not “we tracked prompts.” It is “we shipped a monthly report the client understands, and it drove the next month’s actions.”

Prioritize

Multi-brand tracking

Exports and shareable reporting

Competitive benchmarking that is client-ready

Clear audit trail of prompt sets

2) In-house SEO needing actionability

You do not need more dashboards. You need a loop that turns visibility data into content briefs, technical fixes, and authority work. Your success metric is “we changed something, and the trend moved.”

Prioritize

Citation and source visibility at URL/domain level

Prompt tagging by funnel stage and product line

Trend tracking across time

Workflow friendliness (exports, share links, collaboration)

3) PR and brand team monitoring mentions

You care about narrative, reputation, and whether AI is repeating the right story about you. You may not own the site, but you own positioning. Your success metric is “we can see when AI gets our brand wrong, and we can influence it.”

Prioritize

Mention monitoring and context

Competitive share of voice framing

Source discovery (which publications are shaping the narrative)

Monitoring cadence and alerts where available

Related terms people search for

Teams often use different language for the same need. If you are aligning this work internally, these are common adjacent terms:

AI brand mention tracking

AI citation tracking

AI search visibility tracking

LLM visibility tracking

Google AI Overviews tracking

Perplexity citation monitoring

“How to get cited by AI answers”

GEO tools and AEO tools

If you want to build a prompt set that matches real intent, use: High-intent AI search prompt set.

Our selection criteria

These criteria were applied equally to every tool listed below.

Mention tracking quality

Can it detect brand mentions reliably across prompt sets?

Can it separate you vs competitors?

Citation and source visibility

Can it show cited domains and URLs?

Can it summarize top sources shaping answers?

Prompt workflow

Can you organize prompts by intent, category, or funnel stage?

Can you reuse the same prompt set over time?

Competitive benchmarking

Can you compare against a defined competitor set?

Does it support share-of-voice or similar framing?
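
This guide does not prescribe a share-of-voice formula, and tools may calculate it differently. A minimal sketch, assuming share of voice means each brand's share of all brand mentions across one prompt set (the brand names and counts are made up for illustration):

```python
def share_of_voice(mention_counts):
    """Return each brand's share of total mentions (assumed definition)."""
    total = sum(mention_counts.values())
    if total == 0:
        # No brand was mentioned at all, so every share is zero.
        return {brand: 0.0 for brand in mention_counts}
    return {brand: count / total for brand, count in mention_counts.items()}


# Hypothetical mention counts from one run of a fixed prompt set.
sov = share_of_voice({"our_brand": 30, "competitor_a": 50, "competitor_b": 20})
print(sov)  # {'our_brand': 0.3, 'competitor_a': 0.5, 'competitor_b': 0.2}
```

Whatever definition a tool uses, the comparison is only stable if the competitor set and the prompt set stay fixed between runs.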

Reporting and exports

Can you export or share results in a way that supports stakeholder reporting?

Practicality

Is the product usable without a long onboarding cycle?

Is the data understandable enough to drive actions?

How we evaluated tools

Evaluation date: December 2025

Last updated: January 2026

Prompt set characteristics

We used a prompt set designed to reflect how teams actually get evaluated:

50 to 120 prompts per category, depending on tool constraints and workflow

3 intent buckets

Informational (definitions, “what is”, beginner questions)

Consideration (comparisons, “best”, alternatives)

High intent (pricing, use-case fit, “for agencies”, implementation)
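
A prompt set like this can be kept as plain data so the same prompts are rerun every cycle. The sketch below is one possible structure, not the format any listed tool uses; the class name, bucket labels, and example prompts are assumptions for illustration.

```python
from collections import Counter
from dataclasses import dataclass

# Bucket labels mirror the three intent buckets above (naming is an assumption).
INTENT_BUCKETS = ("informational", "consideration", "high_intent")


@dataclass(frozen=True)
class TrackedPrompt:
    text: str
    intent: str                   # must be one of INTENT_BUCKETS
    tags: tuple[str, ...] = ()    # optional labels, e.g. product line


def validate_prompt_set(prompts):
    """Count prompts per bucket; reject unknown buckets and duplicate prompts."""
    seen = set()
    counts = Counter({bucket: 0 for bucket in INTENT_BUCKETS})
    for prompt in prompts:
        if prompt.intent not in INTENT_BUCKETS:
            raise ValueError(f"unknown intent bucket: {prompt.intent!r}")
        key = prompt.text.strip().lower()
        if key in seen:
            raise ValueError(f"duplicate prompt: {prompt.text!r}")
        seen.add(key)
        counts[prompt.intent] += 1
    return dict(counts)


# Hypothetical prompts, one per bucket.
PROMPT_SET = [
    TrackedPrompt("What is AI citation tracking?", "informational"),
    TrackedPrompt("Best AI mention tracking tools for agencies", "consideration", ("agency",)),
    TrackedPrompt("AI visibility tracker pricing for a small SEO team", "high_intent", ("pricing",)),
]

bucket_counts = validate_prompt_set(PROMPT_SET)
print(bucket_counts)
```

Freezing the dataclass and rejecting duplicates are small guards against the prompt set drifting between runs, which would make trends harder to compare.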

Industries tested

B2B SaaS categories with clear competitors

Local or entity-heavy scenarios where relevant

Engines tested (as applicable)

Coverage varies by tool. Across tools, the most commonly referenced surfaces included:

Google AI Overviews (and related AI search surfaces)

ChatGPT-style assistants

Perplexity-style answer engines

Gemini-style assistants

Definitions used in this guide

Mention: the AI response includes your brand name (or a clear synonym) in the generated text.

Citation/source: the AI response includes a source reference, typically a domain or URL, that can be tied back to content or a publisher.
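
To make the two definitions concrete, here is a minimal sketch of how a mention and a citation could be detected separately for a single response. It assumes you already have the answer text and the list of source URLs the engine returned; the brand aliases, domains, and function names are hypothetical.

```python
import re
from urllib.parse import urlparse


def find_mentions(answer_text, aliases):
    """Return the aliases that appear in the text as whole words (case-insensitive)."""
    found = []
    for alias in aliases:
        pattern = r"(?<!\w)" + re.escape(alias) + r"(?!\w)"
        if re.search(pattern, answer_text, flags=re.IGNORECASE):
            found.append(alias)
    return found


def find_citations(source_urls, owned_domains):
    """Return cited URLs whose host is an owned domain or one of its subdomains."""
    owned = [domain.lower() for domain in owned_domains]
    hits = []
    for url in source_urls:
        host = (urlparse(url).hostname or "").lower()
        if any(host == d or host.endswith("." + d) for d in owned):
            hits.append(url)
    return hits


# Hypothetical response data for a made-up brand.
answer = "For agencies, Acme Analytics and two competitors are common picks."
sources = ["https://blog.acme.example/guide", "https://reviews.example.org/top-tools"]

mentions = find_mentions(answer, ["Acme Analytics", "Acme"])
citations = find_citations(sources, ["acme.example"])
print(mentions)   # ['Acme Analytics', 'Acme']
print(citations)  # ['https://blog.acme.example/guide']
```

Keeping the two checks in separate functions reflects the point above: a response can mention the brand without citing it, or cite one of its pages without naming it.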

Known limitations

Prompt variance: small changes in wording can change outputs.

Personalization and context: results can differ by user history and model state.

Location variance: some tools support regional testing, others do not.

Model updates: outputs can shift after a model release without any site changes.
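
Because of these sources of variance, a single run says little on its own. One way to work with that, sketched below under the assumption that each prompt is run several times per reporting period, is to report a mention rate per period rather than a yes/no per prompt. The run data is invented for illustration.

```python
from collections import defaultdict


def mention_rate_by_period(runs):
    """runs: iterable of (period, prompt, mentioned). Returns {period: share of runs with a mention}."""
    totals = defaultdict(int)
    hits = defaultdict(int)
    for period, _prompt, mentioned in runs:
        totals[period] += 1
        if mentioned:
            hits[period] += 1
    return {period: hits[period] / totals[period] for period in sorted(totals)}


# Hypothetical runs: two prompts, each run twice per month.
RUNS = [
    ("2025-12", "best ai citation tracker", True),
    ("2025-12", "best ai citation tracker", False),
    ("2025-12", "ai mention tracking pricing", False),
    ("2025-12", "ai mention tracking pricing", False),
    ("2026-01", "best ai citation tracker", True),
    ("2026-01", "best ai citation tracker", True),
    ("2026-01", "ai mention tracking pricing", True),
    ("2026-01", "ai mention tracking pricing", False),
]

rates = mention_rate_by_period(RUNS)
print(rates)  # {'2025-12': 0.25, '2026-01': 0.75}
```

The same aggregation works for citations by swapping the boolean. With only a handful of runs per period the rate is still noisy, so treat small movements with caution.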

If you want a step-by-step setup process and an example workflow, see: How to track AI search visibility with Amadora and AI search visibility tracking: quickstart.

The top tools at a glance

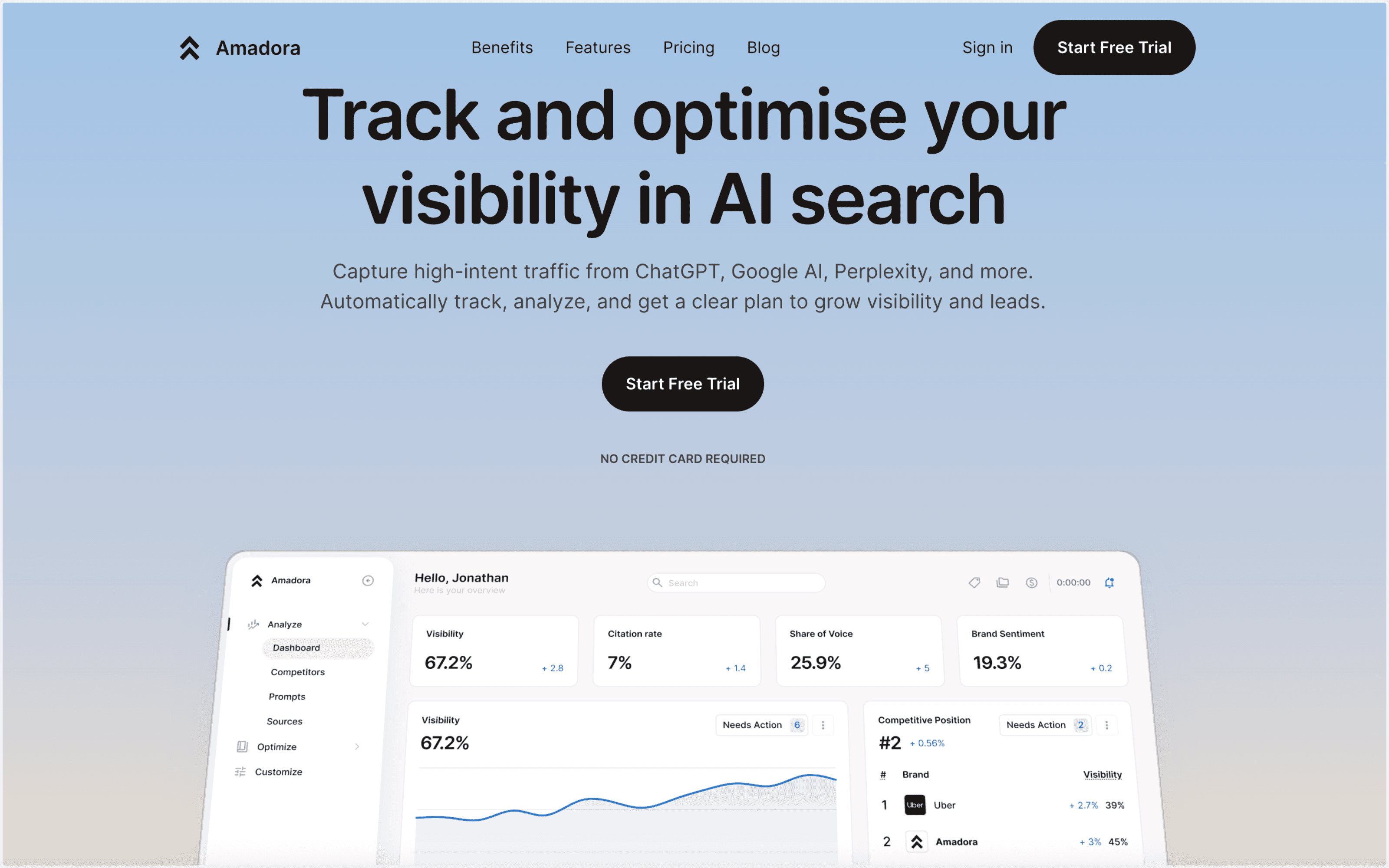

Amadora.ai (our product)

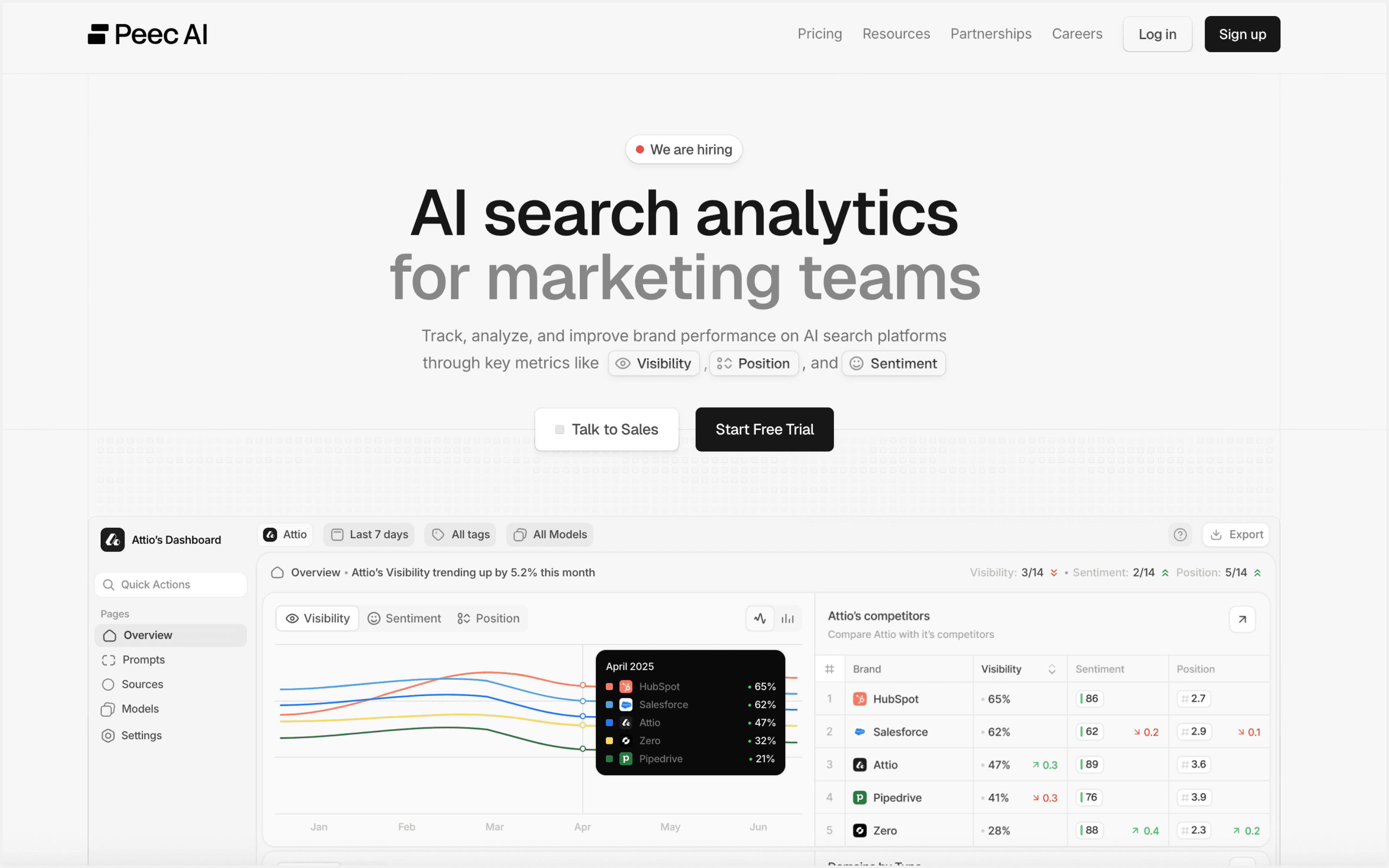

Peec AI

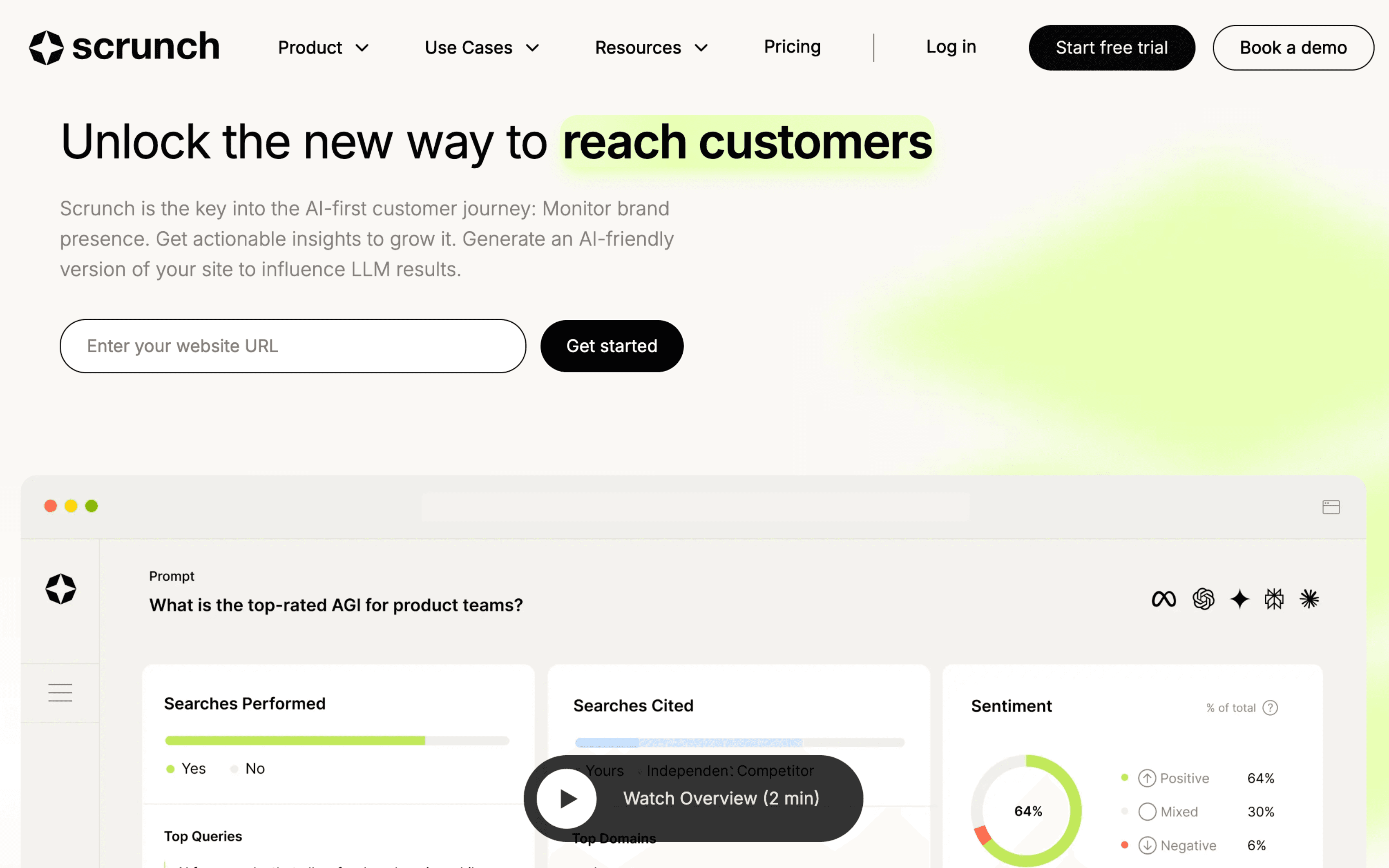

Scrunch AI

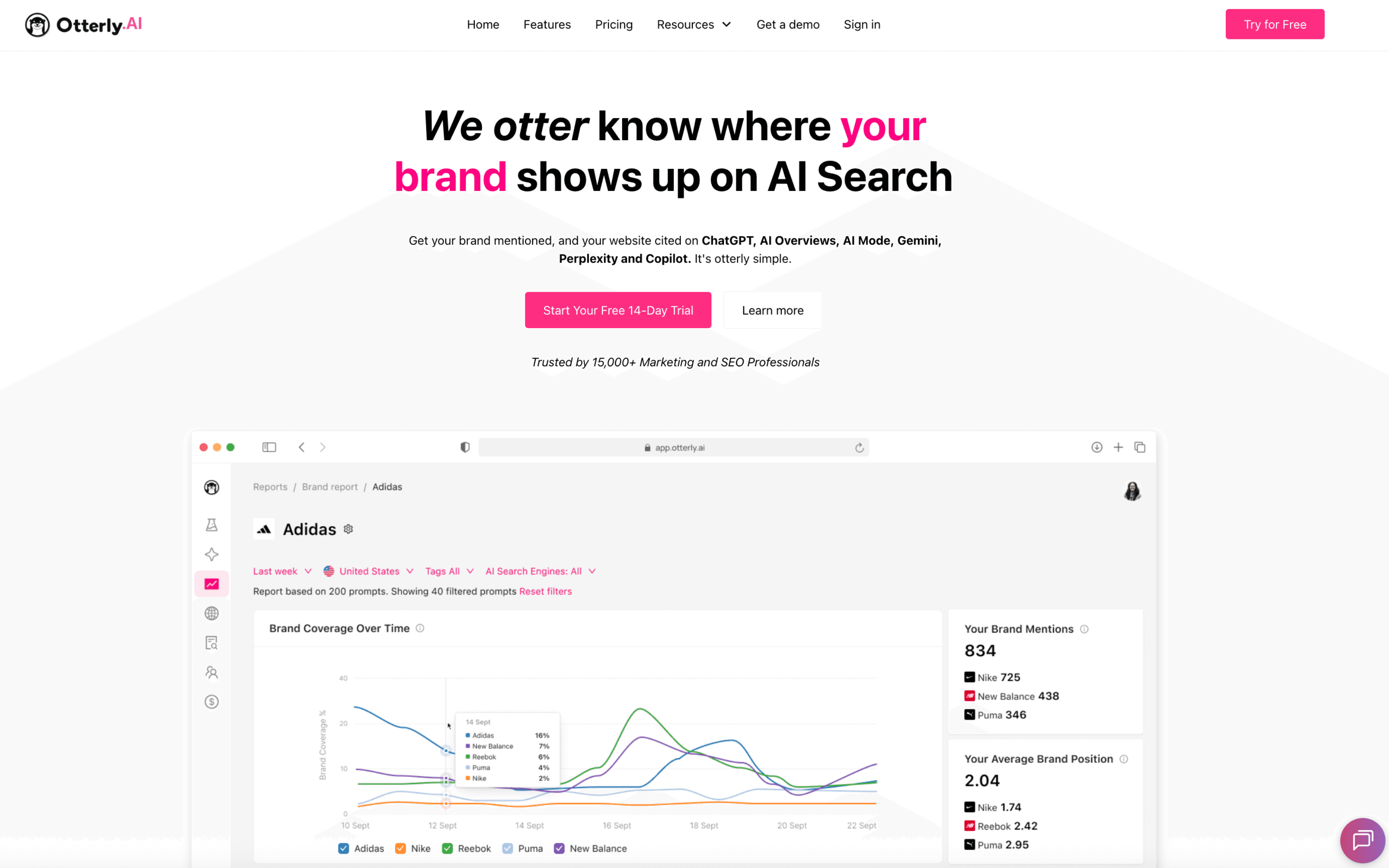

Otterly.AI

Profound

ZipTie.dev

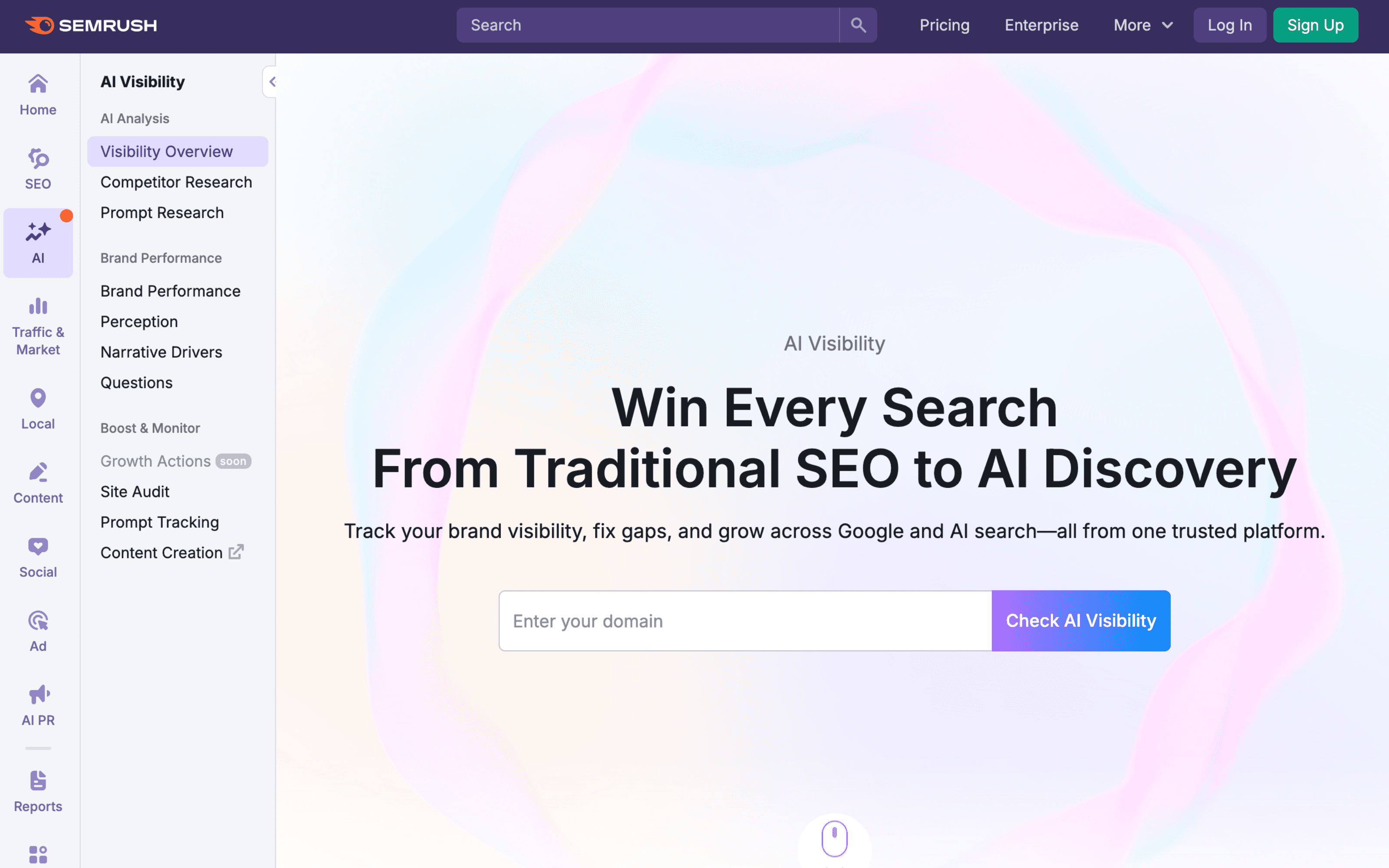

Semrush AI Toolkit

Surfer AI Tracker

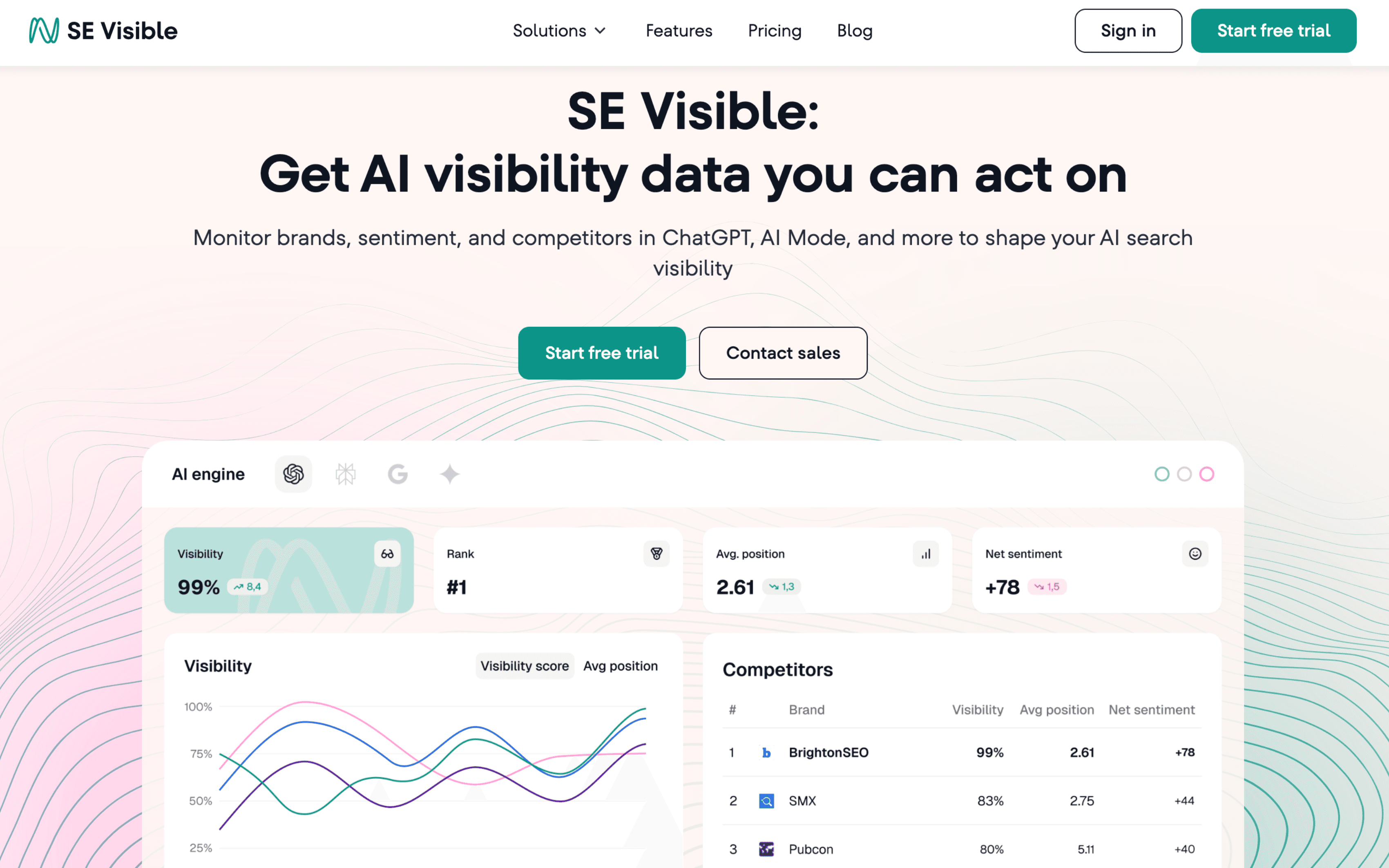

SE Ranking AI Visibility Tracker (SE Visible)

Nightwatch AI Tracking

For a broader category view (beyond mentions and citations), compare with: Top AI search analytics tools in 2026 and Best GEO and AEO tools in 2026.

Comparison table

| Tool | Best for | Tracks | Reporting/export |
|---|---|---|---|
| Amadora.ai (our product) | SEO teams and agencies that need repeatable prompt tracking with competitor and citation visibility | Brand mentions, competitor mentions, citations/sources (domains + URLs), share of voice, prompt-level trends over time | White-label reports for clients; export options available on paid plans |
| Peec AI | Teams that want a visibility dashboard plus source-level competitive context | AI visibility, brand and competitor presence, cited sources, sentiment, trend tracking | CSV exports, Looker Studio connector, API (for automated reporting) |
| Scrunch AI | Teams that want multi-engine visibility tracking with source analysis and competitive context | Brand presence in AI answers, citations/sources, sentiment, competitive benchmarking | CSV exports, API access, PDF exports (scheduled exports available) |
| Otterly.AI | Lean teams that want straightforward AI mention and citation monitoring | Brand mentions, website citations/links, prompt-level tracking across monitored queries | CSV exports for prompts, mentions, and citations; shareable views |
| Profound | Enterprise teams tracking AI visibility at scale across many products or markets | AI visibility, brand presence, citations/sources, competitive benchmarking (package-dependent) | Growth and Enterprise dashboards and reporting (CSV, JSON) |
| ZipTie.dev | Prompt-first tracking for teams that want clean exports and transparency | Prompt-level visibility, citations/sources, competitor comparisons | CSV exports |
| Semrush AI Visibility Toolkit | SEO teams that want AI visibility inside a broader SEO suite | AI visibility insights and brand presence in AI answers, with competitor context | Semrush report builder and exports (format depends on plan and limits) |
| Surfer AI Tracker | Content and SEO teams that want AI visibility reporting that’s easy to share | AI visibility, competitor comparisons, cited sources, prompt performance | CSV exports; shareable report links |
| SE Ranking AI Visibility Tracker (SE Visible) | Agencies and in-house teams that want AI visibility tracking with exports | Brand mentions, sentiment, competitors, and top cited sources across AI platforms | Exportable AI visibility reports (CSV); exports for sources tables (CSV/XLSX) |
| Nightwatch AI Tracking | SEO teams adding AI visibility tracking to reporting workflows | AI visibility, average position, share of voice, sentiment | White-label reporting and Looker Studio integrations |

The best tools (detailed reviews)

Amadora.ai (our product)

Best for: SEO teams and agencies building a repeatable AI visibility program

Engines covered: ChatGPT, Perplexity and Google AI Overviews

What it tracks: Mentions, competitor mentions, citations/sources (domains + URLs), share of voice, prompt-level change over time

Reporting/exports: White-label report exports and CSV options are available on paid plans.

Pricing: Starts at $49/month

Key differentiator: Prompt-level tracking with competitor and source visibility in one workflow

Amadora is designed for teams that want a repeatable measurement loop, not one-off spot checks. The focus is on tracking your prompt set over time, seeing which competitors are being selected, and understanding where citations are coming from so you can act.

If you want the workflow and definitions behind the product, start here: How to track AI search visibility with Amadora. For prompt design, use: High-intent AI search prompt set.

Pros

Strong fit for prompt-level tracking and competitive benchmarking

Designed for ongoing reporting loops, not single audits

Source and citation visibility supports “why them, not us” analysis

Cons

If you only need occasional spot checks, this can be more system than you need

Public documentation for exports and automation is limited

Peec AI

Best for: SEO and marketing teams that want AI visibility dashboards with robust source context

Engines covered: ChatGPT, Perplexity, and Google AI Overviews, with enterprise add‑ons for additional models.

What it tracks: AI visibility, sources and citations, competitor comparisons, trend tracking

Reporting/exports: CSV exports, Looker Studio connector, API access (as publicly documented)

Pricing: Starts at €89/month.

Key differentiator: Reporting-friendly visibility data with integrations for dashboards

Peec is a strong option if your stakeholders expect dashboards and exports and you want to bring AI visibility into an existing reporting stack. It is particularly useful for teams that already run weekly or monthly reporting cadences.

Pros

Strong reporting surface with documented integrations

Useful for competitive benchmarking and trend monitoring

Source visibility helps identify which domains AI relies on

Cons

Engine coverage details can be unclear without a demo

Workflow depth depends on how you organize prompts and categories

Scrunch AI

Best for: Teams that want AI visibility plus exploration of sources and patterns

Engines covered: ChatGPT, Gemini, AI Overviews, Google AI Mode, Perplexity, Copilot, Meta, and Claude

What it tracks: AI visibility in answers, sources and citations, competitive context

Reporting/exports: CSV exports; API access; PDF exports; scheduled exports available

Pricing: Starts at $100/month

Key differentiator: Source exploration and visibility tracking oriented around decision-making

Scrunch positions itself around understanding how AI represents your brand and category, with a focus on sources and visibility patterns. If your team wants to explore what AI is using as references, it can be a practical fit.

Pros

Useful emphasis on sources and drill-down analysis

Designed for ongoing monitoring rather than snapshots

Suitable for teams that want visibility plus narrative context

Cons

Public details on exports and integrations are limited

Exact engine coverage can be unclear without validation

Otterly.AI

Best for: Lean teams that want simple tracking of AI mentions and citations

Engines covered: ChatGPT, Google AI Overviews, Perplexity, MS Copilot

What it tracks: Brand mentions, website citations/links, visibility trends

Reporting/exports: CSV exports for prompts, brand mentions, and website citations

Pricing: Starts at $29/month

Key differentiator: Lightweight monitoring of AI mentions and cited links

Otterly is a good fit when you want fast monitoring without building an enterprise-grade workflow. It works well as a starting point for teams moving from “we have no idea” to “we have a baseline.”

Pros

Straightforward setup and monitoring model

Useful for tracking citations/links alongside mentions

Practical for smaller teams and pilots

Cons

Advanced workflow features may be limited for agencies at scale

Long-term actionability depends on your internal process

Profound

Best for: Enterprise brands investing in AI visibility as a strategic channel

Engines covered: ChatGPT, Perplexity, Google AI Overviews, Claude, Gemini, Microsoft Copilot, Meta AI, Grok, DeepSeek (depending on the plan)

What it tracks: AI visibility, brand presence, citations, sentiment and narrative signals

Reporting/exports: Enterprise dashboards and reports; CSV, JSON

Pricing: Starts at $99/month

Key differentiator: Enterprise positioning with broad AI visibility framing

Profound is positioned for large brands that want an enterprise-grade view of AI visibility and presence. If your organization has many products, regions, or stakeholders, it can fit the “visibility as a program” model.

Pros

Strong enterprise framing and stakeholder alignment

Useful for broad brand visibility monitoring

Built for ongoing tracking rather than one-off research

Cons

Public detail on exports and operational workflow is limited

May be overkill for smaller teams without a dedicated program

ZipTie.dev

Best for: Prompt-first tracking for teams that want clean exports and clarity

Engines covered: Google AI Overviews, ChatGPT, Perplexity.

What it tracks: Prompt-level visibility, citations and sources, competitor comparisons

Reporting/exports: CSV exports (no API access as of January 2026)

Pricing: Starts at $69/month

Key differentiator: Transparent, prompt-first tracking with practical exports

ZipTie is a good fit if you value prompt discipline and want data you can move into your own analysis workflows. It tends to attract teams who want control and clarity.

Pros

CSV exports support offline analysis and reporting

Prompt-first structure encourages measurement hygiene

Competitive comparisons are central to the workflow

Cons

No API access as of January 2026, so automation relies on CSV exports

Engine coverage details should be validated for your use case

Semrush AI Toolkit

Best for: SEO teams who want AI visibility inside a broader SEO platform

Engines covered: ChatGPT, Google AI Overviews, Perplexity, Gemini, Claude, MS Copilot

What it tracks: AI visibility signals, brand presence, competitor context

Reporting/exports: Reporting and exports through Semrush platform (format and limits depend on plan)

Pricing: Starts at $165/month

Key differentiator: AI visibility layered into a mainstream SEO workflow

Semrush is useful if your team already lives in an SEO suite and wants AI visibility as an additional layer rather than a separate program. It can be a practical compromise for teams that need one platform for many stakeholders.

Pros

Familiar workflow for SEO teams already using Semrush

Easier internal adoption in organizations that standardize on one suite

AI visibility becomes part of broader reporting

Cons

AI tracking depth may be less specialized than pure-play tools

The signal can be harder to interpret without strong prompt discipline

Surfer AI Tracker

Best for: Content and SEO teams that want AI visibility with shareable reporting

Engines covered: ChatGPT, Google AI Overviews, and Perplexity

What it tracks: AI visibility, competitor comparisons, sources and citations, prompt performance

Reporting/exports: CSV exports from dashboards; shareable view-only report links

Pricing: Starts at $79/month

Key differentiator: Shareable AI visibility reports with sources and competitor views

Surfer’s AI Tracker is useful if you want a content-team-friendly way to measure visibility and share results with stakeholders or clients through a link, plus export when needed.

Pros

CSV exports are available directly in dashboards

Shareable report links support stakeholder communication

Strong fit for content teams tracking prompt performance

Cons

Engine coverage and update frequency should be validated for your category

Actionability still depends on your editorial and authority workflow

SE Ranking AI Visibility Tracker (SE Visible)

Best for: Agencies and in-house teams that want AI visibility tracking with exports

Engines covered: ChatGPT, Google AI Overviews, Google AI Mode, Gemini, Perplexity

What it tracks: Brand mentions, sentiment, competitors, and sources across AI platforms

Reporting/exports: CSV exports of AI visibility reports; sources table exports (CSV/XLSX)

Pricing: Starts at $189/month

Key differentiator: AI visibility tracking with exportable reports inside an SEO platform

SE Ranking’s AI tracking capabilities are a practical fit when you want AI visibility data plus exports that can support reporting workflows.

Pros

Exportable reports and sources tables support analysis

Competitive tracking is built into the AI visibility framing

Useful for agencies that need multi-brand workflows

Cons

AI visibility is one layer of a broader platform

Depth may vary depending on plan and feature access

Nightwatch AI Tracking

Best for: SEO teams that want AI tracking alongside rank tracking and reporting

Engines covered: ChatGPT, Claude, and Gemini

What it tracks: AI visibility metrics, share of voice, sentiment, and prompt-level performance

Reporting/exports: Report builder with PDF and CSV exports; configurable reports

Pricing: Starts at $99/month

Key differentiator: AI tracking integrated into reporting and rank tracking workflows

Nightwatch is useful if you want to add AI visibility measurement into a system that already supports reporting and ongoing SEO monitoring. It is often a fit for teams that want one reporting motion.

Pros

Report builder supports exportable reporting (PDF/CSV)

Practical for teams already running rank tracking and reporting cycles

Adds AI visibility without requiring a separate pure-play tool

Cons

AI visibility depth should be validated against specialized tools

Tool scope can be broader than what mention/citation-only teams need

Where Amadora is not the best fit

If you are evaluating Amadora, here are scenarios where you should likely choose something else.

You only need occasional spot checks.

If your workflow is “run 10 prompts once, screenshot, done,” a lightweight tool or manual checks may be enough.

You want a general SEO suite first, AI tracking second.

If your organization needs one procurement line for keyword tracking, site audits, backlinks, and reporting, an all-in-one SEO platform may fit better initially.

You cannot commit to prompt set ownership.

If no one can own prompt selection, maintenance, and reporting cadence, any platform will underperform. The constraint will be process, not tooling.

Recommended stacks

1) Lean stack (small team, fast baseline)

Surfer AI Tracker or Otterly.AI for quick monitoring and exports

Add Nightwatch AI Tracking if you also need reporting and rank tracking rhythm

Add Amadora later when you want a repeatable competitive prompt program

2) Reporting-first agency stack (client-ready cadence)

Amadora.ai for prompt-level tracking, competitor benchmarking, and source visibility

SE Ranking AI Visibility Tracker for exportable reports and broader SEO context

Optional: Peec AI when you need dashboard integrations (Looker Studio, API)

3) Content and authority stack (get cited, not just mentioned)

Peec AI or Scrunch AI for source visibility and competitive context

Surfer AI Tracker for content-team-friendly reporting and exports

Pair with a structured prompt plan from: High-intent AI search prompt set

Common mistakes

Tracking only “brand name” prompts and calling it AI visibility

Mixing mentions and citations into one metric, then drawing the wrong conclusions

Changing the prompt set every week, so trends become meaningless

Reporting wins without checking which sources AI used to justify them

Treating AI visibility as a one-time audit instead of a measurement loop

A video worth watching

Want a practical “learn more” on how LLMs surface web pages and how to measure and influence your visibility in ChatGPT, Perplexity, Claude, and Gemini? This episode goes deep on query fan-outs, drift, Search Console signals, and reporting workflows you can actually use.

A hands-on breakdown of how LLMs retrieve and cite sources, how Google rankings still influence citations, and how to measure LLM visibility using Search Console, GA4, and Looker Studio.

FAQ

Do I need a dedicated AI mention and citation tracking tool in 2026?

If AI answers influence your category, yes, you need at least a baseline measurement loop. If your category rarely triggers AI answers, start with a smaller prompt set and reassess quarterly.

How many prompts should we track?

Most teams get useful signal from 50 to 150 prompts per category, split across intent buckets. Start smaller, then expand once you trust your measurement hygiene.

How often should we rerun tracking?

Weekly is useful for fast-moving categories. Monthly is enough for many teams, especially if you tie reporting to content releases and PR cycles.

What is the difference between GEO and AEO?

AEO is about being selected as the best direct answer. GEO is broader: it includes how generative systems represent your brand, which sources they cite, and how you influence that representation.

Can we do this without a tool?

You can start manually, but you will hit limits quickly: prompt volume, repeatability, competitor benchmarking, and evidence trail. Tools are most valuable once you need consistency.

What should we do when AI cites competitors instead of us?

Treat it like a source gap analysis: identify which domains and URLs are being cited, why they are trusted, then build content and authority signals that close that gap.
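
As a rough illustration of the first step, the sketch below counts which domains are cited in responses that do not cite your own domain. It assumes you can export the cited URLs per response (for example from a CSV export); the function name, domain names, and data are hypothetical.

```python
from collections import Counter
from urllib.parse import urlparse


def source_gap(responses, our_domain):
    """Rank domains cited in responses where our_domain is not cited at all."""
    gap = Counter()
    for cited_urls in responses:
        # Normalize each URL to a bare host, counting a domain once per response.
        domains = {
            (urlparse(url).hostname or "").lower().removeprefix("www.")
            for url in cited_urls
        }
        domains.discard("")
        if our_domain in domains:
            continue  # we are already cited here, so there is no gap
        gap.update(domains)
    return gap.most_common()


# Hypothetical cited URLs, one inner list per AI response.
RESPONSES = [
    ["https://www.reviews.example.org/a", "https://competitor.example.com/x"],
    ["https://reviews.example.org/b", "https://acme.example/guide"],
    ["https://reviews.example.org/c"],
]

gap_domains = source_gap(RESPONSES, "acme.example")
print(gap_domains)  # [('reviews.example.org', 2), ('competitor.example.com', 1)]
```

The ranked list is only a starting point. Deciding why a domain is trusted, and what content or authority work would close the gap, still needs a manual review of the cited pages.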

Should PR teams care about citations?

Yes. Citations often reveal which publishers and sources shape the narrative. If AI keeps citing a small set of publications, that becomes a practical target list for earned media and thought leadership.

Final takeaway

AI mention and citation tracking is not a vanity metric. It is a way to measure whether your brand is being selected in answer-first experiences.

Pick a tool that matches your intent mode, but do not skip the foundation: a disciplined prompt set, clear definitions, and a reporting cadence. If you want a concrete starting workflow, use How to track AI search visibility with Amadora and expand from there.
